Precision RAG: Enterprise Grade

08 Jan 2024

Prompt Tuning For Building Enterprise Grade RAG Systems

Introduction

RAG stands for Retrieval-Augmented Generation, a flexible method for grounding the output of large language models in retrieved information. It builds on two families of approaches: retrieval-based approaches, which search a large collection of documents or knowledge sources for information relevant to a given query or context, and generative approaches, which use a large neural language model to generate natural language from scratch or from some input.

RAG combines these two approaches by using a retrieval system to select relevant documents from a knowledge source and then using a generative model to produce natural language that incorporates information from the retrieved documents.
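To make the retrieve-then-generate flow concrete, here is a minimal sketch. It assumes a toy bag-of-words "embedding" and cosine similarity in place of a real encoder and vector database, and it stops at building the augmented prompt rather than calling an LLM. The function names (`embed`, `retrieve`, `build_prompt`) and the sample documents are made up for illustration.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: lowercase bag-of-words counts (a stand-in for a real encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    """Combine the retrieved passages and the question into one prompt for the generator."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

# Hypothetical knowledge source with three short passages.
documents = [
    "The refund policy allows returns within 30 days of purchase.",
    "Support is available by email from Monday to Friday.",
    "Shipping within Europe takes five business days.",
]
query = "How many days do I have to return a purchase?"
passages = retrieve(query, documents, k=1)
prompt = build_prompt(query, passages)
print(prompt)
```

In a real system, `prompt` would then be sent to the generative model, and `embed` would be replaced by a learned embedding model.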

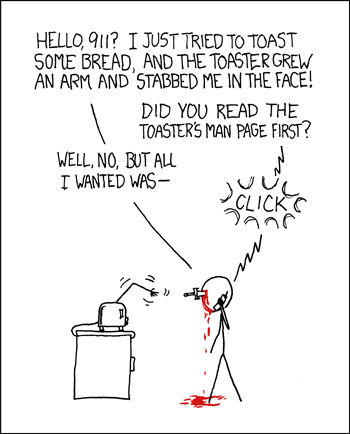

I’ve had instructors address a whole class and say, “There’s no such thing as a stupid question.” I now know that this is not true, because I’ve challenged the statement and received the appropriate dumbstruck, annoyed look. There are definitely stupid questions and, along with them, usually unhelpful answers. Though we may all be guilty of subjecting people to our poorly formed questions, there are steps we can take to ask smarter questions that hopefully don’t elicit the dreaded “RTFM” or “STFW” response.

Challenges

First of all, public LLMs such as OpenAI’s ChatGPT or Google’s Bard have been trained on publicly available data, which has two important implications: they cannot capture information released after their training cut-off, and they had no access to non-public or company-specific data during training. Consequently, these models lack essential, up-to-date information and context, which makes them a poor fit for automatically generating text that requires company-specific information.

For this challenge, using the principles of RAG, we are going to design a method that enhances an LLM’s information retrieval capabilities by drawing context from external documents. However, in certain use cases additional context is not enough: if a pre-trained LLM struggles with summarizing financial data or drawing insights from specifically queried data, it is hard to see how additional context in the form of a single document could help.

General Structure

Using in-context learning, whenever new data is handed in we take that input, split it into chunks, convert each chunk into an n-dimensional vector (an embedding), and store it in a vector database so it is ready for the next steps. The relevant chunks are then fed to the LLM (Large Language Model), which generates a natural-language answer (in this case a set of prompts or an evaluation of a prompt, depending on what the user wants). On the back end, we run an Automatic Evaluation Data Generation Service using RAGAS and a Prompt Testing and Ranking Service using Monte Carlo matchmaking and the Elo rating system.
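The prompt ranking idea can be sketched as follows. This is a minimal illustration under stated assumptions, not the service itself: prompts are paired at random (the Monte Carlo matchmaking), a judge decides each match, and the standard Elo update adjusts both ratings. The `toy_judge` function and its `toy_quality` scores are hypothetical stand-ins for a real evaluation (for example, a score derived from RAGAS metrics), and the starting rating of 1000 and K-factor of 32 are conventional choices we assume here.

```python
import random

def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

def update_elo(rating_a, rating_b, score_a, k=32):
    """Return updated ratings; score_a is 1.0 (A wins), 0.5 (draw) or 0.0 (A loses)."""
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta

def rank_prompts(prompts, judge, rounds=200, k=32, seed=0):
    """Play randomly matched pairs and return (prompt, rating) sorted best first."""
    rng = random.Random(seed)
    ratings = {p: 1000.0 for p in prompts}
    for _ in range(rounds):
        a, b = rng.sample(prompts, 2)  # Monte Carlo matchmaking: a random pair
        ratings[a], ratings[b] = update_elo(ratings[a], ratings[b], judge(a, b), k)
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)

# Hypothetical quality scores standing in for a real evaluation of each prompt.
toy_quality = {
    "Summarize the document.": 0.2,
    "Summarize the document in three bullet points.": 0.6,
    "Summarize the document in three bullet points, citing the source passage.": 0.9,
}

def toy_judge(prompt_a, prompt_b):
    """Hypothetical judge: the prompt with the higher toy quality score wins."""
    if toy_quality[prompt_a] == toy_quality[prompt_b]:
        return 0.5
    return 1.0 if toy_quality[prompt_a] > toy_quality[prompt_b] else 0.0

prompts = list(toy_quality)
ranking = rank_prompts(prompts, toy_judge)
for prompt, rating in ranking:
    print(f"{rating:7.1f}  {prompt}")
```

Because each update adds to one rating exactly what it subtracts from the other, the total rating is conserved; only the relative order carries information.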

To implement this, we follow several steps:

- Define the problem and the solution.

- Design the architecture and the interface.

- Implement the code and the logic.

- Test and debug the application.

- Deploy and monitor the application.

- Iterate and improve the application.

Deep diving into the RAG Model

RAG models require a lot of resources and time to run, as they involve multiple components and models, such as the query encoder, the retriever, the generator, and the knowledge source. RAG models also generate a lot of data and intermediate results, such as the query vector, the retrieved documents, the token probabilities, etc. These factors can affect the speed and the memory efficiency of the RAG model, and limit its scalability and usability.

To optimize the RAG model for speed and memory efficiency, we need to make the following choices:

Choosing the right RAG variant. We need to decide which RAG variant to use, depending on the task and the data. The two variants are RAG-Token and RAG-Sequence. Both retrieve a set of documents for the input query; they differ in how the generator uses them. RAG-Token lets the generator draw on a different retrieved document for each output token, while RAG-Sequence conditions the whole output sequence on the same retrieved document. RAG-Token is more flexible and diverse, but also more expensive and complex, as it has to combine the documents at every generation step. RAG-Sequence is more consistent and coherent, but also more rigid and limited, as each output is generated from a single document. Therefore, in our case RAG-Sequence is the variant that best suits our needs and our resource constraints.
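The contrast between the two variants can be made concrete with a small numeric sketch. It follows the formulation in the original RAG paper as we understand it: RAG-Sequence sums over documents the probability of the whole output, while RAG-Token mixes the documents at every token and then multiplies across tokens. The document weights and per-token probabilities below are made-up numbers, and the function names are ours.

```python
from math import prod

def rag_sequence_prob(doc_priors, token_probs):
    """p(y|x) = sum_z p(z|x) * prod_i p(y_i | x, z, y_<i): one document per whole sequence."""
    return sum(p_z * prod(per_token) for p_z, per_token in zip(doc_priors, token_probs))

def rag_token_prob(doc_priors, token_probs):
    """p(y|x) = prod_i sum_z p(z|x) * p(y_i | x, z, y_<i): documents are mixed at every token."""
    n_tokens = len(token_probs[0])
    return prod(
        sum(p_z * per_token[i] for p_z, per_token in zip(doc_priors, token_probs))
        for i in range(n_tokens)
    )

# Two retrieved documents with equal retrieval weight (made-up numbers).
doc_priors = [0.5, 0.5]
# token_probs[z][i]: probability of output token i when conditioning on document z.
# Document 0 supports the first token well, document 1 supports the second.
token_probs = [
    [0.9, 0.1],
    [0.1, 0.9],
]

seq = rag_sequence_prob(doc_priors, token_probs)
tok = rag_token_prob(doc_priors, token_probs)
print(f"RAG-Sequence: {seq:.2f}, RAG-Token: {tok:.2f}")
```

In this toy case the output needs information from both documents, so the token-level mixture gives it a higher probability (0.25) than the sequence-level one (0.09). When a single document covers the whole answer, the two agree much more closely.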

Choosing the right query encoder. We need to decide which query encoder to use, depending on the input and the output.

Choosing the right retriever. We need to decide which retriever to use, depending on the knowledge source and the relevance.


Choosing the right generator. We need to decide which generator to use, depending on the output and the quality. In this case we have ChatGPT as a generator.


Choosing the right knowledge source. We need to decide which knowledge source to use, depending on the domain and the freshness. In our case we have gone with the challenge document and its details.


Choosing the right parameters and settings. We need to decide which parameters and settings to use, depending on the resources and the performance. These are the values and options that control the behavior and the output of the RAG model. They can include the batch size, the learning rate and the number of epochs (when a component is fine-tuned), as well as the beam size, the temperature, the top-k and the top-p (at generation time). Together they determine the speed and memory efficiency of the RAG model, as well as the quality of its output.
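To show what the generation-time settings do, here is a small sketch of temperature scaling followed by top-k and top-p (nucleus) filtering on a toy next-token distribution. It is a simplified illustration under our own assumptions, not the implementation of any particular library: the logits are made up and the function names are ours.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn logits into probabilities; a lower temperature sharpens the distribution."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_top_p_filter(probs, top_k=0, top_p=1.0):
    """Keep the top_k most likely tokens (0 = no limit), then the smallest prefix
    whose cumulative probability reaches top_p, and renormalize what is left."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k > 0:
        order = order[:top_k]
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}

# Made-up logits for a four-token vocabulary.
logits = [2.0, 1.0, 0.0, -1.0]
probs = softmax_with_temperature(logits, temperature=1.0)
sharp = softmax_with_temperature(logits, temperature=0.5)

only_top_two = top_k_top_p_filter(probs, top_k=2)
nucleus = top_k_top_p_filter(probs, top_p=0.9)
print(sorted(only_top_two), sorted(nucleus))
```

Sampling would then draw the next token from the filtered distribution. Smaller `top_k`, smaller `top_p`, or a lower temperature all make the output more predictable at the cost of diversity.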

Conclusion

This was a very interesting challenge, and one that really adds to one’s skill set. This looks like a potentially lucrative business, and further practice with this kind of innovative engineering will help the tech industry a great deal.

The main hindrance I faced was completing the whole task within the limited time. Getting to the roots of the concepts was another uphill climb. Nonetheless, a huge achievement was made.